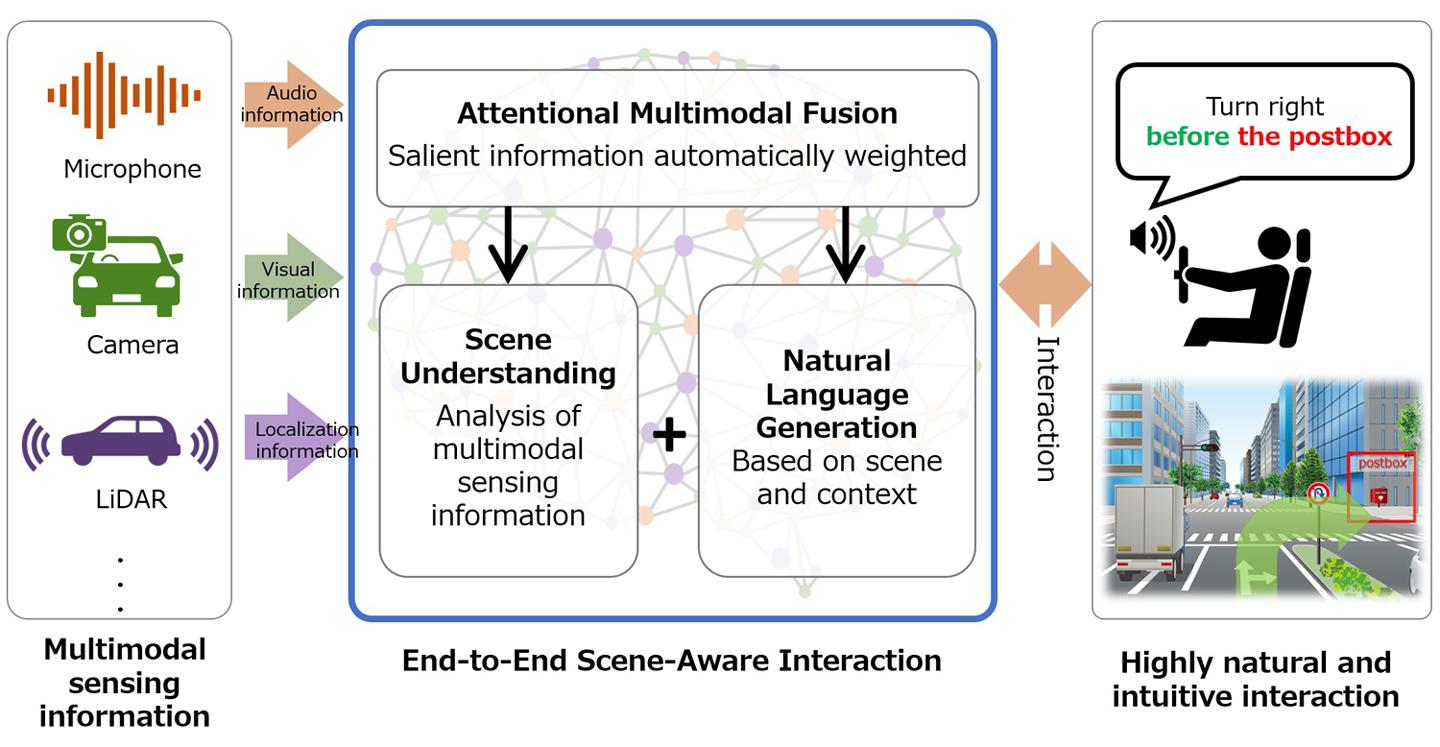

Mitsubishi Electric Corporation has developed the world's first Scene-Aware Interaction technology, which is capable of highly natural and intuitive interaction with humans through context-dependent generation of natural language. The technology incorporates the company's Maisart® compact AI technology to analyze multimodal sensing information: images and video captured with cameras, audio recorded with microphones, and localization information measured with LiDAR.

The company has further developed its Attentional Multimodal Fusion technology, which automatically prioritizes and weights salient unimodal information to support the selection of suitable words for describing scenes. In benchmark testing using a common test set, the technology used audio and visual information to achieve a Consensus-Based Image Description Evaluation (CIDEr) score that was noticeably higher than when using visual information alone. CIDEr is an evaluation metric that measures the similarity of a generated sentence to a set of ground-truth sentences written by people.
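The weighting idea behind attention-based fusion can be illustrated with a minimal sketch. This is not Mitsubishi Electric's implementation: the function names, the feature vectors, and the hand-set relevance scores below are all hypothetical. In a real system the relevance scores would typically be produced by a learned network conditioned on the sentence generated so far, rather than supplied by hand.

```python
import math


def softmax(scores):
    """Convert raw relevance scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attentional_fusion(features, relevance):
    """Fuse per-modality feature vectors into one weighted vector.

    features:  dict mapping modality name -> feature vector (equal lengths)
    relevance: dict mapping modality name -> scalar relevance score
               (hypothetical hand-set values; normally learned)
    Returns (weights per modality, fused feature vector).
    """
    names = sorted(features)
    weights = softmax([relevance[name] for name in names])
    dim = len(features[names[0]])
    fused = [0.0] * dim
    for name, weight in zip(names, weights):
        for i, value in enumerate(features[name]):
            fused[i] += weight * value
    return dict(zip(names, weights)), fused


# Illustrative values only: audio is scored as more relevant than video,
# so the fused vector leans toward the audio features.
features = {"audio": [0.2, 0.9, 0.1], "video": [0.7, 0.1, 0.4]}
relevance = {"audio": 2.0, "video": 0.5}
weights, fused = attentional_fusion(features, relevance)
print(weights)
print(fused)
```

Because the weights are recomputed from the relevance scores each time, a sound-related word could draw mostly on audio features while a word describing appearance could draw mostly on visual ones, which is the intuition behind prioritizing salient unimodal information.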

Combining Attentional Multimodal Fusion with scene understanding technology and context-based natural language generation yields a powerful end-to-end Scene-Aware Interaction system for highly intuitive interaction with users in a variety of situations.

Recent advances in deep neural networks are enabling machines to better understand their surroundings and interact with humans more naturally and intuitively. Scene-Aware Interaction technology is expected to have wide applicability, including human-machine interfaces (HMI) for in-vehicle infotainment, interaction with robots, health monitoring systems, surveillance systems that encourage social distancing, support for touchless operation of equipment in public areas, and much more.

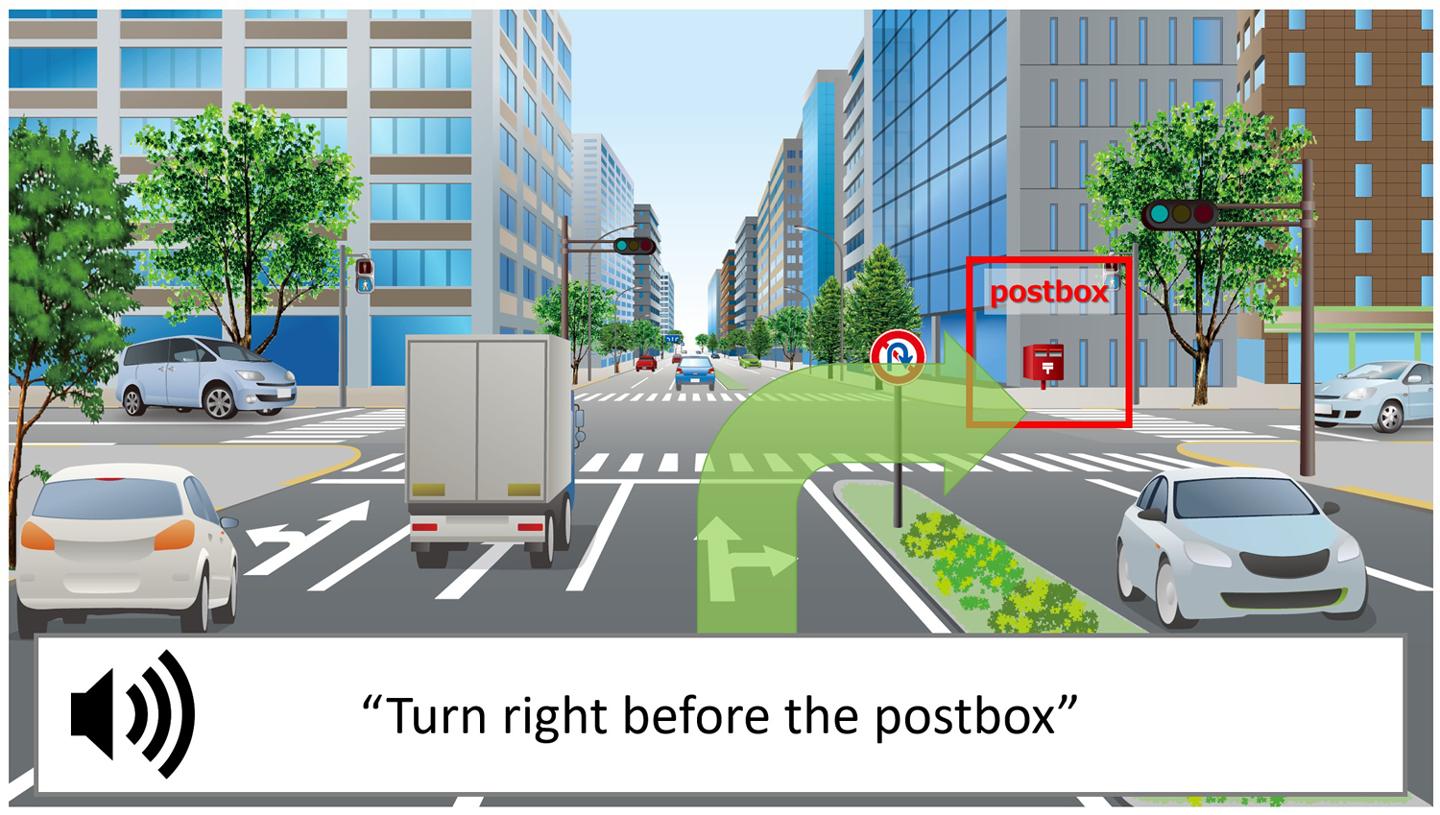

One such application, car navigation, will provide drivers with intuitive route guidance and generate voice warnings when nearby objects are predicted to cross the path of the vehicle. The system analyzes scenes to identify distinguishable visual landmarks and dynamic elements of the scene, and then uses those recognized objects and events to generate intuitive sentences for guidance.

For more information: www.mitsubishielectric.com